Preamble

import numpy as np # for multi-dimensional containers

import pandas as pd # for DataFrames

import platypus as plat # multi-objective optimisation framework

Introduction

When preparing to implement multi-objective optimisation experiments, it's often more convenient to use a ready-made framework or library than to program everything from scratch. Many such libraries exist across different programming languages; since we're using Python, we will choose from Python frameworks such as DEAP, PyGMO, and Platypus.

With our focus on multi-objective optimisation, the choice is an easy one: we will use Platypus, which is designed specifically for multi-objective problems and optimisation.

Platypus is a framework for evolutionary computing in Python with a focus on multiobjective evolutionary algorithms (MOEAs). It differs from existing optimization libraries, including PyGMO, Inspyred, DEAP, and Scipy, by providing optimization algorithms and analysis tools for multiobjective optimization.

As a first look into Platypus, let's repeat the process covered in the earlier section on "Synthetic Objective Functions and ZDT1", where we randomly initialise a solution and then evaluate it using ZDT1.

The ZDT test function

As in the previous section, we will use a synthetic test problem called ZDT1 throughout this notebook. It belongs to the ZDT test suite, which consists of six two-objective synthetic test problems. The suite is fairly old, easy to solve, and very easy to visualise.

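Mathematically, the ZDT1 [1] two-objective test function can be expressed as:

$$
\begin{aligned}
f_1(\mathbf{x}) &= x_1 \\
f_2(\mathbf{x}) &= g(\mathbf{x}) \cdot h\big(f_1(\mathbf{x}), g(\mathbf{x})\big) \\
g(\mathbf{x}) &= 1 + \frac{9}{D - 1} \sum_{d=2}^{D} x_d \\
h(f_1, g) &= 1 - \sqrt{f_1 / g}
\end{aligned}
\tag{1}
$$

where

$$
\mathbf{x} = (x_1, x_2, \ldots, x_D)^{\top} \tag{2}
$$

and all decision variables fall between 0 and 1:

$$
x_d \in [0, 1], \quad d = 1, \ldots, D \tag{3}
$$

For this bi-objective test function, the number of decision variables is typically D = 30, as in the original study [1].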

Let's start implementing this in Python, beginning with the initialisation of a solution according to Equations 2 and 3. In this case, we will have D = 30 problem variables.

D = 30

x = np.random.rand(D)

print(x)

[0.3146922 0.18069651 0.8669023 0.90181727 0.51662277 0.22707642 0.88705032 0.34286548 0.41625942 0.70440383 0.03021398 0.70399831 0.00460576 0.36639118 0.57878733 0.99144161 0.17477581 0.76024859 0.18537833 0.65383628 0.35665724 0.45243136 0.97317158 0.46832485 0.86421827 0.27234544 0.31520583 0.16295762 0.64142004 0.49828092]
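np.random.rand draws each value uniformly from the half-open interval [0, 1), so every sample respects the bounds in Equation 3. In experiments it is often useful to make this initialisation reproducible and to create a whole population at once. The following is a minimal sketch, assuming NumPy's Generator API; the seed of 42 and the population size N = 100 are arbitrary illustrative choices, not values from this notebook:

```python
import numpy as np

D = 30    # number of problem variables, as above
N = 100   # population size (an arbitrary choice for illustration)

# A seeded generator makes the random initialisation reproducible
rng = np.random.default_rng(seed=42)

# Each row is one candidate solution; values are drawn uniformly from [0, 1)
population = rng.random((N, D))

print(population.shape)  # (100, 30)

# Every decision variable lies within the ZDT1 bounds of Equation 3
print(population.min() >= 0.0, population.max() < 1.0)
```

Re-creating the generator with the same seed produces exactly the same population, which makes results easier to compare between runs.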

Now that we have a solution to evaluate, let's implement the ZDT1 synthetic test function using Equation 1.

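def ZDT1(x):
    D = len(x)  # number of problem variables, taken from the input
    f1 = x[0]  # objective 1
    g = 1 + 9 * np.sum(x[1:] / (D - 1))
    h = 1 - np.sqrt(f1 / g)
    f2 = g * h  # objective 2
    return [float(f1), float(f2)]  # plain floats print cleanly in any NumPy version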

Finally, let's invoke our implemented test function on our solution:

objective_values = ZDT1(x)

print(objective_values)

[0.314692198381057, 4.183957223498328]

These are the two objective values, f1 and f2, that measure our solution.
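Equation 1 can also be applied to many solutions in one call. The sketch below is a hypothetical vectorised variant (the name zdt1_population is our own, not part of any library) that evaluates every row of a 2-D NumPy array and is intended to give the same result as ZDT1 above for each row:

```python
import numpy as np

def zdt1_population(X):
    """Evaluate ZDT1 for every row of X (shape: N x D).

    Returns an N x 2 array whose columns are f1 and f2.
    """
    X = np.atleast_2d(X)  # also accept a single 1-D solution
    D = X.shape[1]
    f1 = X[:, 0]                                    # objective 1, one per row
    g = 1 + 9 * np.sum(X[:, 1:], axis=1) / (D - 1)  # one g value per row
    h = 1 - np.sqrt(f1 / g)
    f2 = g * h                                      # objective 2, one per row
    return np.column_stack((f1, f2))

# Usage: when x_2, ..., x_D are all zero, g = 1, so each solution lies
# on the Pareto-optimal front f2 = 1 - sqrt(f1)
X = np.zeros((5, 30))
X[:, 0] = np.linspace(0, 1, 5)  # x_1 = 0, 0.25, 0.5, 0.75, 1
F = zdt1_population(X)
print(np.allclose(F[:, 1], 1 - np.sqrt(F[:, 0])))  # True
```

The final check relies on a known property of ZDT1: its Pareto-optimal solutions are exactly those with g = 1, which makes it a convenient sanity test for any implementation.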

Using a Framework

We've quickly repeated our earlier exercise, in which we moved from a mathematical description of ZDT1 to an implementation in Python. Now, let's use the Platypus implementation of ZDT1, which saves us from having to write it, and other test functions, in Python ourselves.

We have already imported Platypus as plat above, so to get an instance of ZDT1 all we need to do is call its constructor.

problem = plat.ZDT1()

Just like that, our variable problem references an instance of the ZDT1 test problem.

Next, we need to create a solution using the structure defined by Platypus. This Solution object is what Platypus expects when performing the operations it provides.

solution = plat.Solution(problem)

By using the Solution() constructor and passing in our earlier instantiated problem, the solution is initialised with the correct number of variables and objectives. We can check this ourselves.

print(f"This solution's variables are set to:\n{solution.variables}")

print(f"This solution has {len(solution.variables)} variables")

This solution's variables are set to:
[None, None, None, None, None, None, None, None, None, None, None, None, None, None, None, None, None, None, None, None, None, None, None, None, None, None, None, None, None, None]
This solution has 30 variables

print(f"This solution's objectives are set to:\n{solution.objectives}")

print(f"This solution has {len(solution.objectives)} objectives")

This solution's objectives are set to:
[None, None]
This solution has 2 objectives
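Conceptually, a solution keeps a reference to its problem, and the problem determines how many variable and objective slots the solution has. The toy classes below (ToyZDT1 and ToySolution are hypothetical names) only mimic this behaviour to illustrate the idea; they are not Platypus's actual implementation:

```python
import math

class ToyZDT1:
    """Hypothetical stand-in for a problem object (not Platypus code)."""

    def __init__(self, nvars=30, nobjs=2):
        self.nvars = nvars
        self.nobjs = nobjs

    def evaluate(self, solution):
        # Compute ZDT1 from the solution's variables and store the result
        x = solution.variables
        f1 = x[0]
        g = 1 + 9 * sum(x[1:]) / (self.nvars - 1)
        solution.objectives = [f1, g * (1 - math.sqrt(f1 / g))]


class ToySolution:
    """Hypothetical stand-in for a solution object (not Platypus code)."""

    def __init__(self, problem):
        self.problem = problem
        # Unset slots sized by the problem, like the None values printed above
        self.variables = [None] * problem.nvars
        self.objectives = [None] * problem.nobjs

    def evaluate(self):
        # Delegate the calculation to the problem this solution belongs to
        self.problem.evaluate(self)


toy = ToySolution(ToyZDT1())
print(len(toy.variables), len(toy.objectives))  # 30 2

toy.variables = [0.25] + [0.0] * 29  # g = 1, so f2 = 1 - sqrt(0.25)
toy.evaluate()
print(toy.objectives)  # [0.25, 0.5]
```

Keeping the calculation in the problem object means the same solution structure can be reused with any test function.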

Earlier in this section we randomly generated 30 problem variables and stored them in the variable x. Let's assign these to our solution's variables and check that it worked.

solution.variables = x

print(f"This solution's variables are set to:\n{solution.variables}")

This solution's variables are set to:
[0.3146922 0.18069651 0.8669023 0.90181727 0.51662277 0.22707642 0.88705032 0.34286548 0.41625942 0.70440383 0.03021398 0.70399831 0.00460576 0.36639118 0.57878733 0.99144161 0.17477581 0.76024859 0.18537833 0.65383628 0.35665724 0.45243136 0.97317158 0.46832485 0.86421827 0.27234544 0.31520583 0.16295762 0.64142004 0.49828092]

Now we can invoke the evaluate() method, which uses the assigned problem to calculate the objective values from the problem variables. We can print these out afterwards to see the results.

solution.evaluate()

print(solution.objectives)

[0.314692198381057, 4.183957223498328]

These objective values match the ones calculated by our own implementation of ZDT1. In general, two implementations of the same function should agree up to floating-point rounding error.
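Two implementations of the same formula may group the arithmetic differently (for example, dividing each term inside the sum or scaling the sum once outside it), so their results can differ in the last few bits of floating-point precision. Comparing with a tolerance is therefore safer than testing for exact equality. A minimal sketch using np.allclose with its default tolerances; zdt1_alt is a hypothetical rearrangement of Equation 1 written only for this comparison:

```python
import numpy as np

def zdt1(x):
    # Our implementation: divide each term by (D - 1) inside the sum
    D = len(x)
    f1 = x[0]
    g = 1 + 9 * np.sum(x[1:] / (D - 1))
    return [f1, g * (1 - np.sqrt(f1 / g))]

def zdt1_alt(x):
    # Hypothetical rearrangement: scale the sum once, outside it
    D = len(x)
    f1 = x[0]
    g = (9.0 / (D - 1)) * sum(x[1:]) + 1.0
    return [f1, g * (1.0 - np.sqrt(f1 / g))]

x = np.random.default_rng(1).random(30)
ours, alt = zdt1(x), zdt1_alt(x)

# Exact equality is not guaranteed; a tolerance-based check is robust
print(np.allclose(ours, alt))  # True
```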

Conclusion

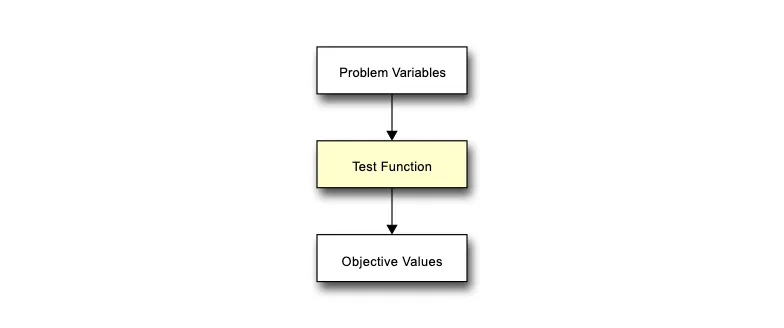

In this section, we introduced a framework for multi-objective optimisation and analysis. We used it to create an instance of the ZDT1 test problem, which we then used to initialise an empty solution. We then assigned randomly generated problem variables to this solution and evaluated it with the ZDT1 test function to determine the objective values.

Exercise

Using the framework introduced in this section, evaluate a number of randomly generated solutions for ZDT2, ZDT3, ZDT4, and ZDT6.

[1] E. Zitzler, K. Deb, and L. Thiele. Comparison of Multiobjective Evolutionary Algorithms: Empirical Results. Evolutionary Computation, 8(2):173-195, 2000.