Preamble

You may be missing the imblearn package imported below. With Anaconda, you can install it using conda install -c conda-forge imbalanced-learn

import numpy as np

import pandas as pd

import plotly.graph_objects as go

from imblearn.over_sampling import ADASYN

Introduction

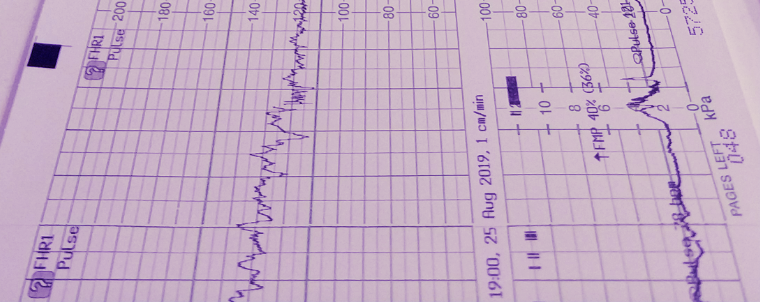

We've covered class imbalance and oversampling in another section, so this section serves as a further example on a different dataset. We'll be using the Cardiotocography (CTG) dataset located at https://archive.ics.uci.edu/ml/datasets/cardiotocography. It has 23 attributes, two of which are different classifications of the same samples: CLASS (1 to 10) and NSP (1 to 3).

Downloading the Dataset

To keep this notebook independent, we will download the CTG dataset within our code. If you've already downloaded it and viewed it in spreadsheet software, you will have noticed that we want to use the "Data" sheet, and that we also want to drop the first row.

url = (

"https://archive.ics.uci.edu/ml/machine-learning-databases/00193/CTG.xls"

)

data = pd.read_excel(url, sheet_name="Data", header=1)

Let's have a quick look at what we've downloaded.

data.head()

| | b | e | AC | FM | UC | DL | DS | DP | DR | Unnamed: 9 | ... | E | AD | DE | LD | FS | SUSP | Unnamed: 42 | CLASS | Unnamed: 44 | NSP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 240.0 | 357.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | NaN | ... | -1.0 | -1.0 | -1.0 | -1.0 | 1.0 | -1.0 | NaN | 9.0 | NaN | 2.0 |
| 1 | 5.0 | 632.0 | 4.0 | 0.0 | 4.0 | 2.0 | 0.0 | 0.0 | 0.0 | NaN | ... | -1.0 | 1.0 | -1.0 | -1.0 | -1.0 | -1.0 | NaN | 6.0 | NaN | 1.0 |
| 2 | 177.0 | 779.0 | 2.0 | 0.0 | 5.0 | 2.0 | 0.0 | 0.0 | 0.0 | NaN | ... | -1.0 | 1.0 | -1.0 | -1.0 | -1.0 | -1.0 | NaN | 6.0 | NaN | 1.0 |
| 3 | 411.0 | 1192.0 | 2.0 | 0.0 | 6.0 | 2.0 | 0.0 | 0.0 | 0.0 | NaN | ... | -1.0 | 1.0 | -1.0 | -1.0 | -1.0 | -1.0 | NaN | 6.0 | NaN | 1.0 |
| 4 | 533.0 | 1147.0 | 4.0 | 0.0 | 5.0 | 0.0 | 0.0 | 0.0 | 0.0 | NaN | ... | -1.0 | -1.0 | -1.0 | -1.0 | -1.0 | -1.0 | NaN | 2.0 | NaN | 1.0 |

5 rows × 46 columns

Preparing the Dataset

Looking at the end of the data, we can see that the last 3 rows are not samples.

data.tail()

| | b | e | AC | FM | UC | DL | DS | DP | DR | Unnamed: 9 | ... | E | AD | DE | LD | FS | SUSP | Unnamed: 42 | CLASS | Unnamed: 44 | NSP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 2124 | 1576.0 | 3049.0 | 1.0 | 0.0 | 9.0 | 0.0 | 0.0 | 0.0 | 0.0 | NaN | ... | 1.0 | -1.0 | -1.0 | -1.0 | -1.0 | -1.0 | NaN | 5.0 | NaN | 2.0 |
| 2125 | 2796.0 | 3415.0 | 1.0 | 1.0 | 5.0 | 0.0 | 0.0 | 0.0 | 0.0 | NaN | ... | -1.0 | -1.0 | -1.0 | -1.0 | -1.0 | -1.0 | NaN | 1.0 | NaN | 1.0 |
| 2126 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |
| 2127 | NaN | NaN | NaN | NaN | NaN | 0.0 | 0.0 | 0.0 | 0.0 | NaN | ... | 72.0 | 332.0 | 252.0 | 107.0 | 69.0 | 197.0 | NaN | NaN | NaN | NaN |
| 2128 | NaN | NaN | NaN | 564.0 | 23.0 | 16.0 | 1.0 | 4.0 | 0.0 | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

5 rows × 46 columns

So we will get rid of them.

data = data.drop(data.tail(3).index)

Let's check to make sure they're gone.

data.tail()

| | b | e | AC | FM | UC | DL | DS | DP | DR | Unnamed: 9 | ... | E | AD | DE | LD | FS | SUSP | Unnamed: 42 | CLASS | Unnamed: 44 | NSP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 2121 | 2059.0 | 2867.0 | 0.0 | 0.0 | 6.0 | 0.0 | 0.0 | 0.0 | 0.0 | NaN | ... | 1.0 | -1.0 | -1.0 | -1.0 | -1.0 | -1.0 | NaN | 5.0 | NaN | 2.0 |
| 2122 | 1576.0 | 2867.0 | 1.0 | 0.0 | 9.0 | 0.0 | 0.0 | 0.0 | 0.0 | NaN | ... | 1.0 | -1.0 | -1.0 | -1.0 | -1.0 | -1.0 | NaN | 5.0 | NaN | 2.0 |
| 2123 | 1576.0 | 2596.0 | 1.0 | 0.0 | 7.0 | 0.0 | 0.0 | 0.0 | 0.0 | NaN | ... | 1.0 | -1.0 | -1.0 | -1.0 | -1.0 | -1.0 | NaN | 5.0 | NaN | 2.0 |
| 2124 | 1576.0 | 3049.0 | 1.0 | 0.0 | 9.0 | 0.0 | 0.0 | 0.0 | 0.0 | NaN | ... | 1.0 | -1.0 | -1.0 | -1.0 | -1.0 | -1.0 | NaN | 5.0 | NaN | 2.0 |
| 2125 | 2796.0 | 3415.0 | 1.0 | 1.0 | 5.0 | 0.0 | 0.0 | 0.0 | 0.0 | NaN | ... | -1.0 | -1.0 | -1.0 | -1.0 | -1.0 | -1.0 | NaN | 1.0 | NaN | 1.0 |

5 rows × 46 columns
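Dropping by position assumes there are exactly three trailing non-sample rows. As a hedged alternative, the sketch below drops every row that has no NSP label, which does not depend on the row count. The toy dataframe and its values are made up for illustration and only mimic the shape of the problem.

```python
import numpy as np
import pandas as pd

# Toy stand-in for the CTG sheet (made-up values): two real samples
# followed by two summary rows that carry no NSP label.
toy = pd.DataFrame(
    {
        "LB": [120.0, 132.0, np.nan, np.nan],
        "DS": [0.0, 0.0, 0.0, 1.0],
        "NSP": [2.0, 1.0, np.nan, np.nan],
    }
)

# Keep only the rows that have a label, however many trailing rows exist.
toy_clean = toy.dropna(subset=["NSP"])
print(toy_clean)
```

On the real data this would be data.dropna(subset=["NSP"]), assuming every genuine sample has an NSP value.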

Now let's create a dataframe that only contains our input features and our desired classification. In the spreadsheet we know our 21 features were labelled with the numbers 1 to 21, starting from the column LB and ending on the column Tendency. Let's display all the columns in our dataframe.

data.columns

Index(['b', 'e', 'AC', 'FM', 'UC', 'DL', 'DS', 'DP', 'DR', 'Unnamed: 9', 'LB',

'AC.1', 'FM.1', 'UC.1', 'DL.1', 'DS.1', 'DP.1', 'ASTV', 'MSTV', 'ALTV',

'MLTV', 'Width', 'Min', 'Max', 'Nmax', 'Nzeros', 'Mode', 'Mean',

'Median', 'Variance', 'Tendency', 'Unnamed: 31', 'A', 'B', 'C', 'D',

'E', 'AD', 'DE', 'LD', 'FS', 'SUSP', 'Unnamed: 42', 'CLASS',

'Unnamed: 44', 'NSP'],

dtype='object')

There are many ways to reduce all of these columns down to the 21 we want. In this notebook, we're going to be explicit with our column selections to ensure no errors can be introduced from a change in the external spreadsheet data.

columns = [

"LB",

"AC.1",

"FM.1",

"UC.1",

"DL.1",

"DS.1",

"DP.1",

"ASTV",

"MSTV",

"ALTV",

"MLTV",

"Width",

"Min",

"Max",

"Nmax",

"Nzeros",

"Mode",

"Mean",

"Median",

"Variance",

"Tendency",

]

features = data[columns]

Let's have a peek at our new dataframe.

features.head()

| | LB | AC.1 | FM.1 | UC.1 | DL.1 | DS.1 | DP.1 | ASTV | MSTV | ALTV | ... | Width | Min | Max | Nmax | Nzeros | Mode | Mean | Median | Variance | Tendency |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 120.0 | 0.000000 | 0.0 | 0.000000 | 0.000000 | 0.0 | 0.0 | 73.0 | 0.5 | 43.0 | ... | 64.0 | 62.0 | 126.0 | 2.0 | 0.0 | 120.0 | 137.0 | 121.0 | 73.0 | 1.0 |
| 1 | 132.0 | 0.006380 | 0.0 | 0.006380 | 0.003190 | 0.0 | 0.0 | 17.0 | 2.1 | 0.0 | ... | 130.0 | 68.0 | 198.0 | 6.0 | 1.0 | 141.0 | 136.0 | 140.0 | 12.0 | 0.0 |
| 2 | 133.0 | 0.003322 | 0.0 | 0.008306 | 0.003322 | 0.0 | 0.0 | 16.0 | 2.1 | 0.0 | ... | 130.0 | 68.0 | 198.0 | 5.0 | 1.0 | 141.0 | 135.0 | 138.0 | 13.0 | 0.0 |
| 3 | 134.0 | 0.002561 | 0.0 | 0.007682 | 0.002561 | 0.0 | 0.0 | 16.0 | 2.4 | 0.0 | ... | 117.0 | 53.0 | 170.0 | 11.0 | 0.0 | 137.0 | 134.0 | 137.0 | 13.0 | 1.0 |
| 4 | 132.0 | 0.006515 | 0.0 | 0.008143 | 0.000000 | 0.0 | 0.0 | 16.0 | 2.4 | 0.0 | ... | 117.0 | 53.0 | 170.0 | 9.0 | 0.0 | 137.0 | 136.0 | 138.0 | 11.0 | 1.0 |

5 rows × 21 columns
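An explicit column list fails with a KeyError if the spreadsheet ever renames or drops a column. If a more readable failure is wanted, a small check can be run before selecting. The helper name select_columns and the toy dataframe are hypothetical and exist only for this sketch.

```python
import pandas as pd


def select_columns(frame, wanted):
    """Return frame[wanted], failing early with a readable message.

    Hypothetical helper for illustration; not part of pandas.
    """
    missing = [name for name in wanted if name not in frame.columns]
    if missing:
        raise KeyError(f"Columns missing from the spreadsheet: {missing}")
    return frame[wanted]


# Made-up one-row frame standing in for the CTG data.
toy = pd.DataFrame({"LB": [120.0], "ASTV": [73.0], "NSP": [2.0]})
toy_features = select_columns(toy, ["LB", "ASTV"])
print(toy_features)
```

Calling select_columns(toy, ["LB", "Tendency"]) would raise a KeyError that names Tendency as the missing column.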

Let's also store our labels, the NSP column, which contains our desired classifications, in a separate pandas Series.

labels = data["NSP"]

Again, we'll check to see what we've created.

labels.head()

0    2.0
1    1.0
2    1.0
3    1.0
4    1.0
Name: NSP, dtype: float64

Frequency of each Classification

Now let's see how many samples we have for each class.

value_counts = labels.value_counts()

fig = go.Figure()

fig.add_bar(x=value_counts.index, y=value_counts.values)

fig.show()

We can see that our dataset is imbalanced, with class 1 having a significantly higher number of samples. This may be a problem for us depending on our approach, so we may want to balance this dataset before continuing our study.
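Alongside the bar chart, the imbalance can be put into numbers. The sketch below computes class proportions and the ratio between the largest and smallest class. The labels in it are made up for illustration and are not the CTG counts; on the real data, the same calls would be applied to the labels variable.

```python
import pandas as pd

# Made-up labels for illustration only (8, 3 and 1 samples per class).
toy_labels = pd.Series([1.0] * 8 + [2.0] * 3 + [3.0] * 1, name="NSP")

counts = toy_labels.value_counts()
proportions = toy_labels.value_counts(normalize=True)

# Ratio of the most frequent class to the least frequent class.
imbalance_ratio = counts.max() / counts.min()

print(proportions)
print("imbalance ratio:", imbalance_ratio)
```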

Oversampling

We can perform oversampling using the implementation of the Adaptive Synthetic (ADASYN) sampling approach in the imbalanced-learn library. We will pass in our features and labels variables, and expect outputs that have been resampled so that the class frequencies are approximately balanced.

features_resampled, labels_resampled = ADASYN().fit_resample(features, labels)

Let's see how many samples we have for each class this time.

labels_resampled = pd.DataFrame(labels_resampled, columns=["NSP"])

value_counts = labels_resampled["NSP"].value_counts()

fig = go.Figure()

fig.add_bar(x=value_counts.index, y=value_counts.values)

fig.show()

Much better!
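For intuition about where the extra samples come from: ADASYN, like SMOTE, builds each synthetic sample by interpolating between a minority-class sample and one of its minority-class nearest neighbours, and it generates more of them around minority samples whose neighbourhoods are dominated by other classes. The sketch below shows only the interpolation step, with made-up two-feature points. It is not the library's implementation, and the function name interpolate is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)


def interpolate(sample, neighbour, rng):
    """Return a random point on the segment between two minority samples."""
    lam = rng.random()  # uniform in [0, 1)
    return sample + lam * (neighbour - sample)


# Two made-up minority-class samples with two features each.
x_i = np.array([120.0, 0.5])
x_zi = np.array([130.0, 2.5])

synthetic = interpolate(x_i, x_zi, rng)
print(synthetic)
```

Because the new point always lies between two existing minority samples, the synthetic data stays inside the region the minority class already occupies.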

Conclusion

In this section, we've used adaptive synthetic sampling to resample and balance our CTG dataset. The output is a balanced dataset. However, it's important to remember that these approaches should only be applied to training data, and never to data that will be used for testing. For your own experiments, make sure you only apply an approach like this after you have already split your dataset and locked away the test set for later.